Learn how to set up Apache Spark on IBM Cloud Kubernetes Service by pushing the Spark container images to IBM Cloud Container Registry…

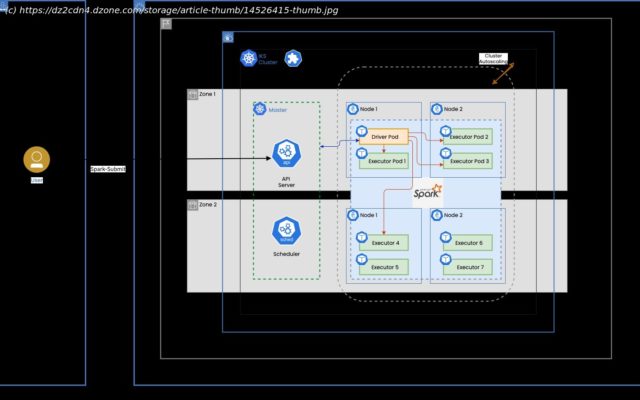

Let’s begin by looking at the technologies involved.

Apache Spark (Spark) is an open source data-processing engine for large data sets. It is designed to deliver the computational speed, scalability and programmability required for big data, specifically for streaming data, graph data, machine learning and artificial intelligence (AI) applications. Spark’s analytics engine can process data 10 to 100 times faster than alternatives, depending on the workload. It scales by distributing processing work across large clusters of computers, with built-in parallelism and fault tolerance. It also includes APIs for programming languages that are popular among data analysts and data scientists, including Scala, Java, Python and R.

Kubernetes is an open source platform for managing containerized workloads and services across multiple hosts. It offers management tools for deploying, automating, monitoring and scaling containerized apps with minimal-to-no manual intervention.

IBM Cloud Kubernetes Service is a managed offering that lets you create your own Kubernetes cluster of compute hosts to deploy and manage containerized apps on IBM Cloud. As a certified Kubernetes provider, IBM Cloud Kubernetes Service provides intelligent scheduling, self-healing, horizontal scaling, service discovery and load balancing, automated rollouts and rollbacks, and secret and configuration management for your apps.
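To make the idea of distributing processing work across partitions a little more concrete, here is a minimal local sketch in plain Python. It is only an analogy and does not use Spark: the function names (`split_into_partitions`, `count_words`, `parallel_word_count`) and the sample data are hypothetical, and a thread pool on one machine stands in for the workers of a cluster. It shows the general pattern of splitting data, processing each partition independently, and merging the partial results.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, num_partitions):
    """Split records into roughly equal chunks using round-robin slicing."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """Per-partition step: count the words in one chunk of lines."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(records, num_partitions=4):
    """Process each partition on its own worker, then merge the results."""
    partitions = split_into_partitions(records, num_partitions)
    # Assumption: local threads stand in for cluster workers in this sketch.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    total = Counter()
    for partial in partial_counts:
        total.update(partial)  # merge step: add up the partial counts
    return total


lines = [
    "spark on kubernetes",
    "spark on ibm cloud",
    "kubernetes on ibm cloud",
]
result = parallel_word_count(lines, num_partitions=2)
print(result.most_common())
```

Spark applies the same split, process and merge idea across many machines, and additionally handles scheduling and recovery from failed workers, which this sketch does not attempt.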