Steve Wozniak and Stuart Russell were among the signatories of an open letter warning that advanced models pose “profound risks to society and humanity.”

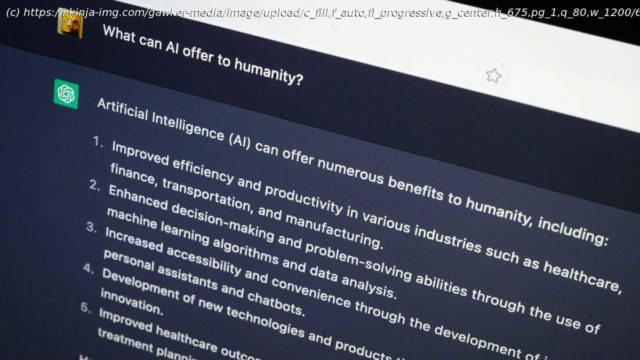

A wide-ranging coalition of more than 500 technologists, engineers, and AI ethicists has signed an open letter calling on AI labs to immediately pause, for at least six months, the training of any AI system more powerful than OpenAI’s recently released GPT-4. The signatories, who include Apple co-founder Steve Wozniak and “based AI” developer Elon Musk, warn that these advanced new models could pose “profound risks to society and humanity” if allowed to advance without sufficient safeguards. If companies refuse to pause development, the letter says, governments should whip out the big guns and institute a mandatory moratorium.

“Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources,” the letter reads. “Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one—not even their creators—can understand, predict, or reliably control.”

The letter was released by the Future of Life Institute, an organization that describes itself as focused on steering technologies away from perceived large-scale risks to humanity. Its primary areas of concern include AI, biotechnology, nuclear weapons, and climate change. The group’s concerns over AI rest on the assumption that those systems “are now becoming human-competitive at general tasks.” That level of sophistication, the letter argues, could lead to a near future in which bad actors use AI to flood the internet with propaganda, once-stable jobs are made redundant, and “nonhuman minds” emerge that could outcompete or “replace” humans.

Emerging AI systems, the letter argues, currently lack meaningful safeguards or controls that ensure they are safe “beyond a reasonable doubt.” To solve that problem, the letter says AI labs should use the pause to implement and agree on a shared set of safety protocols and to ensure their systems are audited by independent outside experts. One of the prominent signatories told Gizmodo that the details of what that review would actually look like in practice are still “very much a matter of discussion.” Notably, the pause and added safeguards wouldn’t apply to all AI development. Instead, they would focus on “black-box models with emergent capabilities” deemed more powerful than OpenAI’s GPT-4. Crucially, that includes OpenAI’s in-development GPT-5.

“AI research and development should be refocused on making today’s powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal,” the letter reads.

AI skeptics are divided on the scale of the threat

Gizmodo spoke with Stuart Russell, a professor of computer science at the University of California, Berkeley, and co-author of Artificial Intelligence: A Modern Approach.
