Large language models (LLMs) are an evolution of natural language processing (NLP) techniques that can rapidly generate texts closely resembling those written by humans and complete other simple language-related tasks. These .

Large language models (LLMs) are an evolution of natural language processing (NLP) techniques that can rapidly generate texts closely resembling those written by humans and complete other simple language-related tasks. These models have become increasingly popular after the public release of Chat GPT, a highly performing LLM developed by OpenAI.

Recent studies evaluating LLMs have so far primarily tested their ability to create well-written texts, define specific terms, write essays or other documents, and produce effective computer code. Nonetheless, these models could potentially help humans to tackle various other real-world problems, including fake news and misinformation.

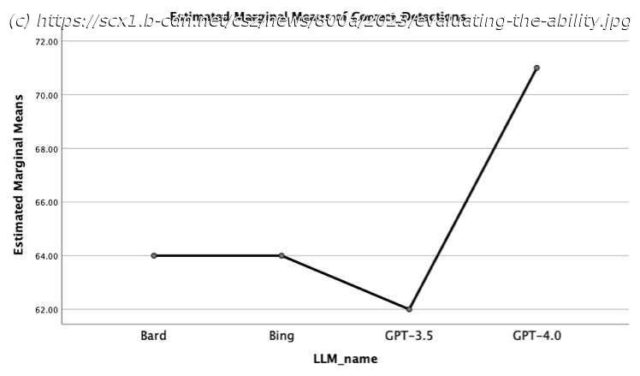

Kevin Matthe Caramancion, a researcher at University of Wisconsin-Stout, recently carried out a study evaluating the ability of the most well-known LLMs released to date to detect if a news story is true or fake. His findings, in a paper on the preprint server arXiv, offers valuable insight that could contribute to the future use of these sophisticated models to counteract online misinformation.

“The inspiration for my recent paper came from the need to understand the capabilities and limitations of various LLMs in the fight against misinformation,” Caramancion told Tech Xplore. “My objective was to rigorously test the proficiency of these models in discerning fact from fabrication, using a controlled simulation and established fact-checking agencies as a benchmark.