In April this year, Microsoft announced the Phi-3 family of small language models (SLMs). Phi-3-mini, a 3.8-billion-parameter language model trained on 3.3 trillion tokens, rivals Mixtral 8x7B and GPT-3.5 on benchmarks, according to Microsoft. Microsoft’s recently announced Copilot+ PCs use large language models (LLMs) running in the Azure cloud in concert with several of Microsoft’s small language models to unlock a new set of AI experiences that run locally, directly on the device.

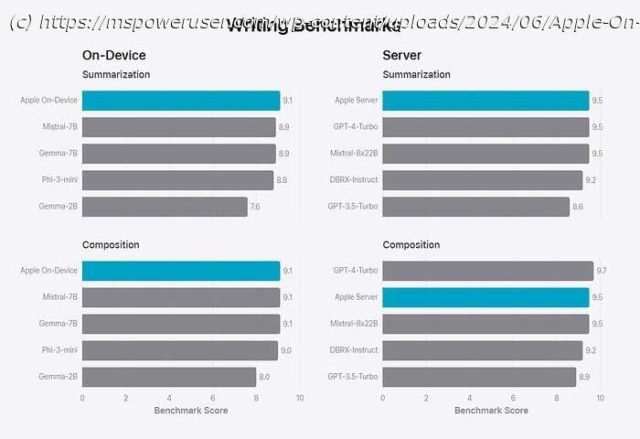

At WWDC 2024, Apple announced Apple Intelligence, its Copilot alternative, for its devices. Apple Intelligence is powered by multiple highly capable generative models. Similar to Microsoft’s approach, Apple uses both on-device and server-based models. Yesterday, Apple detailed two of the foundation models that power Apple Intelligence.
