At Gartner’s annual expo, analysts offered a deeper dive into how businesses should approach AI, from when to avoid gen AI to how to scale for a future dominated by the technology.

Not surprisingly, AI was a major theme at Gartner’s annual Symposium/IT Expo in Orlando last week, with the keynote explaining why companies should focus on value and move to AI at their own pace. But I was more interested in some of the smaller sessions where they focused on more concrete examples, from when not to use generative AI to how to scale and govern the technology to the future of AI. Here are some of the things I found most interesting.

When Not to Use Generative AI

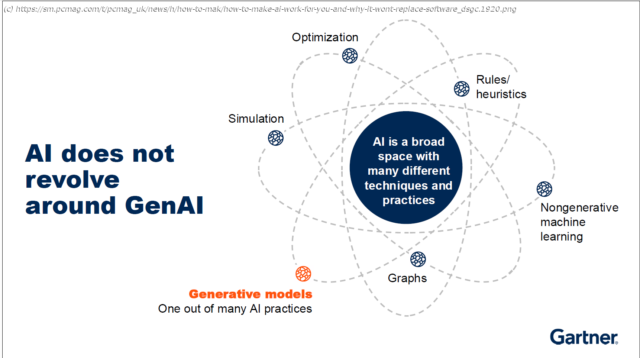

“AI does not revolve around gen AI, although it might feel like it right now,” Gartner Fellow Rita Sallam said in a presentation entitled “When Not to Use Generative AI.” She noted that while boards may now be asking technology leaders to use generative AI, in reality many organizations have used AI of different kinds for many years, in things such as supply chain optimization, sales forecasting, and fraud detection.

Sallam shared data from a recent survey showing that gen AI is already the most popular technique organizations use when adopting AI solutions, followed by machine learning techniques such as regression.

She stressed that generative AI is very useful for the right use cases, but not for everything. She said it is very good at content generation, knowledge discovery, and conversational user interfaces, but has weaknesses with reliability, hallucinations, and a lack of reasoning. Generative AI is probabilistic, not deterministic, she noted, and said it was at the “peak of inflated expectations” in Gartner’s hype cycle.

She warned that organizations that solely focus on gen AI increase the risk of failure in their AI projects and may miss out on many opportunities.

Gen AI is not a good fit for planning and optimization, prediction and forecasting, decision intelligence, and autonomous systems, Sallam said. In each of these categories, she listed examples, explained why gen AI fails in those areas, and suggested alternative techniques.

“Agentic AI” from gen AI vendors offers promise for solving some of these issues, but she said this is still a work in progress, and she urged attendees to beware of “agent-washing.”

Sallam said companies need to start with the use case, then pick the tool that works best for it. She showed a heat map rating how well different common AI techniques suit each of a variety of families of use cases. No technique is perfect, she said, so many people will want to combine different AI techniques.

Best Practices in Scaling Generative AI

For those projects that will use gen AI, Gartner’s Arun Chandrasekaran shared some “Best Practices in Scaling Generative AI.” He started this session by repeating a statistic Gartner publicized earlier saying that at least 30% of generative AI projects will fail (which didn’t surprise me much – I think 30% of all big IT projects don’t succeed, at least not on time). He said more recent numbers suggest that as many as 60 to 70% of gen AI projects don’t make it into production. The top reasons for this, he said, are data quality, inadequate risk controls (such as privacy concerns), escalating costs, or unclear business value.

According to another survey, one of the first and most used applications of gen AI is for IT code generation and similar things like testing and documentation, Chandrasekaran said. It’s also being used to modernize applications and other infrastructure and operations areas such as IT security and devops.

The second-most common application is customer service. He said generally this is not customer-facing chatbots now, but rather things that convert customer service calls to text, or perform sentiment analysis on those conversations. We are seeing AI systems help agents better answer customer queries, he said, but generally there is still a human in the loop.

Next up is marketing, from generating calls to creating personalized social media calls. Overall, he said 42% of AI investments are for customer-facing applications.

Chandrasekaran then listed methods for scaling generative AI, beginning with creating a process for determining which use cases have the highest business value and the highest feasibility, and prioritizing those use cases. Then comes the question of build versus buy, but with gen AI that is more nuanced, he said, because there is a range of choices, including applications that have gen AI built in, those that embed APIs, those that extend gen AI with retrieval-augmented generation (RAG), those that use models that are customized via fine-tuning, and finally those that use custom models.

Most customers will choose one of the first three of these, he said, but it’s most important to align the choice with the goals of the application.
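The prioritization step Chandrasekaran described, ranking use cases by business value and feasibility, can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the 1-to-5 scales, the scores, and the choice to multiply the two numbers are hypothetical and were not part of the presentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    business_value: int  # hypothetical scale: 1 (low) to 5 (high)
    feasibility: int     # hypothetical scale: 1 (hard) to 5 (easy)


def prioritize(use_cases):
    """Rank use cases so that high-value, high-feasibility ones come first.

    Multiplying the two scores is an assumed heuristic, not Gartner's method.
    Ties are broken in favor of higher business value.
    """
    return sorted(
        use_cases,
        key=lambda u: (u.business_value * u.feasibility, u.business_value),
        reverse=True,
    )


# Example candidates drawn from the article; the scores are invented.
candidates = [
    UseCase("autonomous planning", business_value=5, feasibility=1),
    UseCase("code generation", business_value=4, feasibility=5),
    UseCase("call transcription", business_value=3, feasibility=4),
]

ranked = [u.name for u in prioritize(candidates)]
print(ranked)
```

A product of the two scores pushes down ideas that are valuable but impractical, which is the point of weighing feasibility alongside value; a real process would likely use richer criteria.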

From there, you need to create pilots or proofs of concept. The goal is to experiment, and find out what works and what doesn’t. He suggested a sandbox environment for testing these out.

To build an application, he said, you’ll want a composable platform architecture, in part so you can use the model that is the most cost-effective at any point in time. Then you’ll want a “responsible AI” initiative covering data privacy, model safety (including “red teaming”), explainability, and fairness.

Next is investing in data and AI literacy, including things like prompt engineering and understanding what AI is good at and where it has issues, since so many knowledge workers will be using gen AI tools in the next few years. He agreed that “it’s important to know when to use AI and also when not to use gen AI.” Then, he said, you’ll want robust data engineering practices, including tools for integrating your data with gen AI models.

Today, machines and people have an uncomfortable relationship, so you’ll want a process for enabling seamless collaborations among humans and machines. This includes techniques such as keeping a “human in the loop” to vet gen AI system outputs and things like empathy maps.

Then there are special financial operations practices, so you’ll need to understand things like using smaller models, creating prompt libraries, and caching model responses. Model routers can figure out the cheapest model to give you an appropriate response.
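To make the cost ideas above concrete, here is a minimal sketch of a model router that picks the cheapest model able to handle a request and caches responses so repeated prompts cost nothing. Everything in it is an assumption for illustration: the `Model` and `CachingRouter` names, the capability levels, the per-call costs, and the stand-in `generate` functions are hypothetical and do not correspond to any vendor’s API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Model:
    name: str
    cost_per_call: float             # hypothetical cost in dollars
    capability: int                  # hypothetical level: 1 (basic) to 3 (advanced)
    generate: Callable[[str], str]   # stand-in for a real model call


class CachingRouter:
    """Route each prompt to the cheapest capable model and cache the answer."""

    def __init__(self, models: List[Model]) -> None:
        # Sort once so the first capable model found is also the cheapest.
        self._models = sorted(models, key=lambda m: m.cost_per_call)
        self._cache: Dict[Tuple[str, int], str] = {}
        self.spent = 0.0

    def ask(self, prompt: str, required_capability: int) -> str:
        key = (prompt, required_capability)
        if key in self._cache:
            return self._cache[key]  # cache hit: no model call, no cost
        for model in self._models:
            if model.capability >= required_capability:
                answer = model.generate(prompt)
                self.spent += model.cost_per_call
                self._cache[key] = answer
                return answer
        raise ValueError("no model meets the required capability")


# Fake models that just label their output, so the sketch runs offline.
small = Model("small", 0.001, 1, lambda p: f"[small] {p}")
large = Model("large", 0.02, 3, lambda p: f"[large] {p}")

router = CachingRouter([large, small])
first = router.ask("Summarize this ticket", required_capability=1)
second = router.ask("Summarize this ticket", required_capability=1)  # served from cache
hard = router.ask("Draft a migration plan", required_capability=3)
print(first, hard, router.spent, sep="\n")
```

In practice the hard part is deciding what capability a prompt requires; here the caller supplies it, which sidesteps that problem entirely.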

Finally, he said companies need to “adopt a product approach” and think of IT as a “product owner” making sure the product is on a continuous update schedule and that it continues to meet people’s needs.

AI Strategy and Maturity: Value Lessons From Practice

Gartner’s Svetlana Sicular talked about “AI Strategy and Maturity,” beginning by saying an AI strategy needs to have four pillars: vision, risks, value, and adoption. These pillars balance each other out, she said, noting that without adoption, you won’t deliver on the vision and without managing risk, you won’t get value.

The average mature company has 59 AI use cases in production. But the key first step is to select and prioritize the use cases that make the most sense for your organization. For most, she said, you should start by selecting a series of three to six use cases that all more or less use the same technique. Once you understand one case, the others will go faster, and you’ll be able to determine which ones pan out and which do not. Only 48% of use cases end up in production, she noted.

Sicular said it’s more important to experiment with use cases than to spend your time comparing vendors, because the products will change in six months. And she stressed that the AI solution doesn’t need to be gen AI, just something that adds value. Only after you have use cases and have experimented will you be ready to create a strategy, setting the expectations for your organization or in your budget.