Ever been asked a question you only knew part of the answer to? To give a more informed response, your best move would be to phone a friend with more knowledge on the subject.

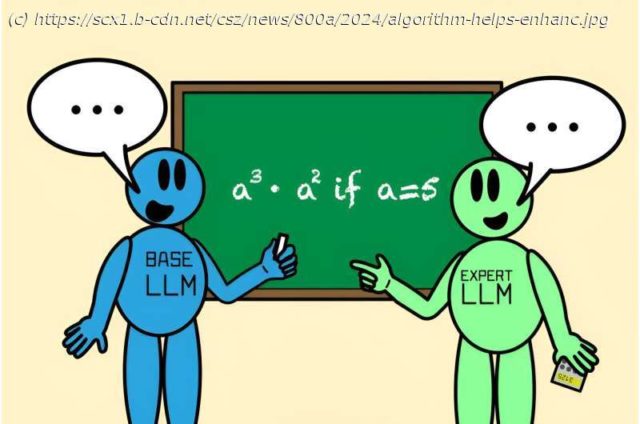

This collaborative process can also help large language models (LLMs) improve their accuracy. Still, it’s been difficult to teach LLMs to recognize when they should collaborate with another model on an answer. Instead of using complex formulas or large amounts of labeled data to spell out where models should work together, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have envisioned a more organic approach.

Their new algorithm, called “Co-LLM”, can pair a general-purpose base LLM with a more specialized model and help them work together. As the base model crafts an answer, Co-LLM reviews each word (or token) within its response to see where it can call upon a more accurate token from the expert model. This process leads to more accurate replies to things like medical prompts and math and reasoning problems. Because the expert model is not needed at every step, responses are also generated more efficiently.

To decide when a base model needs help from an expert model, the framework uses machine learning to train a “switch variable”, or a tool that can indicate the competence of each word within the two LLMs’ responses. The switch is like a project manager, finding areas where it should call in a specialist.

If you asked Co-LLM to name some examples of extinct bear species, for instance, the two models would draft an answer together. The general-purpose LLM begins to put together a reply, with the switch variable intervening at the parts where it can slot in a better token from the expert model, such as adding the year when the bear species became extinct.
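The token-by-token handoff described above can be pictured with a small Python sketch. This is not the CSAIL implementation: the function `collaborative_decode`, the `threshold` parameter, and the scripted stand-in "models" are all hypothetical, and the switch here is hard-coded, whereas Co-LLM learns it from data. The placeholder tokens `[unsure]` and `[date]` stand in for a fact the base model lacks and the expert supplies.

```python
from typing import Callable, List, Tuple

Token = str
NextToken = Callable[[List[Token]], Token]    # prefix -> next token
DeferScore = Callable[[List[Token]], float]   # prefix -> score for deferring


def collaborative_decode(
    base_next: NextToken,
    expert_next: NextToken,
    defer_score: DeferScore,
    prompt: List[Token],
    threshold: float = 0.5,      # hypothetical cutoff, not from the paper
    max_new_tokens: int = 20,
    eos: Token = "<eos>",
) -> Tuple[List[Token], List[str]]:
    """Generate tokens one at a time, deferring to the expert when the switch fires."""
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_new_tokens):
        # The switch decides, per token, which model produces the next token.
        use_expert = defer_score(tokens) > threshold
        token = expert_next(tokens) if use_expert else base_next(tokens)
        if token == eos:
            break
        tokens.append(token)
        sources.append("expert" if use_expert else "base")
    return tokens[len(prompt):], sources


# Toy stand-ins (assumptions for illustration only): each "model" replays a script.
PROMPT = ["Name", "an", "extinct", "bear", ":"]
BASE_SCRIPT = ["The", "cave", "bear", "vanished", "about", "[unsure]", "years", "ago", "<eos>"]
EXPERT_SCRIPT = ["The", "cave", "bear", "vanished", "about", "[date]", "years", "ago", "<eos>"]


def base_next(tokens: List[Token]) -> Token:
    return BASE_SCRIPT[len(tokens) - len(PROMPT)]


def expert_next(tokens: List[Token]) -> Token:
    return EXPERT_SCRIPT[len(tokens) - len(PROMPT)]


def defer_score(tokens: List[Token]) -> float:
    # Hard-coded switch: high score only where the base model lacks the fact.
    return 0.9 if len(tokens) - len(PROMPT) == 5 else 0.1


generated, sources = collaborative_decode(base_next, expert_next, defer_score, PROMPT)
print(" ".join(generated))
print(sources)
```

In this toy run the expert is consulted for only one of the eight generated tokens, which mirrors the efficiency argument: the specialist is called only where the switch judges it necessary.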

“With Co-LLM, we’re essentially training a general-purpose LLM to ‘phone’ an expert model when needed”, says Shannon Shen, an MIT Ph.D. student in electrical engineering and computer science and CSAIL affiliate who’s a lead author on a new paper about the approach.
