Technology will continue to evolve, and deepfakes will become harder to identify. Unless adequate protections and strategies are put in place, they could severely harm businesses.

Some young people floss for a TikTok dance challenge. A couple posts a holiday selfie to keep friends updated on their travels. A budding influencer uploads their latest YouTube video. Unwittingly, each one is adding fuel to an emerging fraud vector that could become enormously challenging for businesses and consumers alike: Deepfakes.

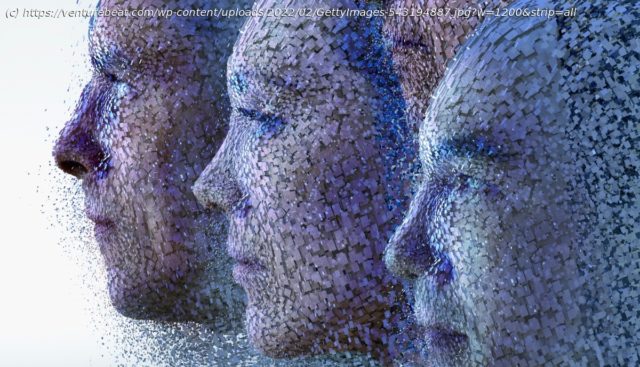

Deepfakes get their name from the underlying technology: Deep learning, a subset of machine learning (itself a branch of artificial intelligence, or AI) that uses layered neural networks loosely inspired by the way humans acquire knowledge. With deep learning, algorithms learn patterns from vast datasets with little hand-crafted guidance from humans. In general, the bigger the dataset, the more accurate the algorithm is likely to become.

Deepfakes use AI to create highly convincing video or audio files that mimic a third party, for instance a video of a celebrity saying something they did not, in fact, say. Deepfakes are produced for a broad range of reasons, some legitimate and some not. These include satire, entertainment, fraud, political manipulation, and the generation of “fake news.”

Deepfakes pose a real and present danger to society because they make it possible to put words into the mouths of powerful, influential, or trusted people such as politicians, journalists, or celebrities.