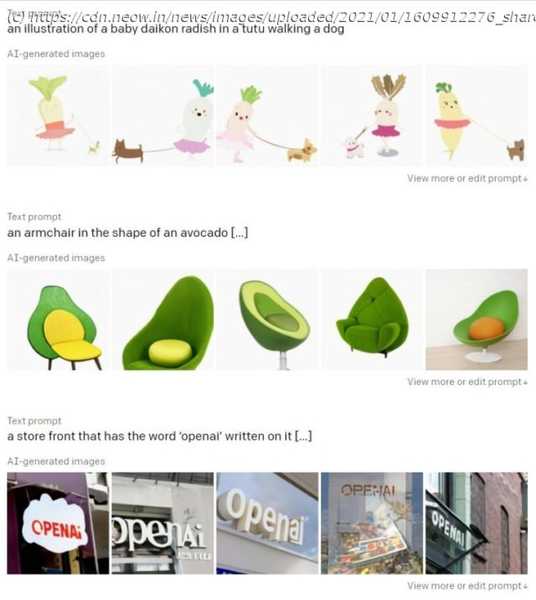

DALL·E, a 12-billion-parameter version of GPT-3, is a transformer model that produces images from a given caption. The results are impressive, with the model capturing minute details.

Last year, OpenAI released GPT-3, the largest transformer model to date with 175 billion parameters. The model demonstrated great prowess in generating text from a given context, and OpenAI licensed it exclusively to Microsoft, which provides the computational backend required to host and run the model for its customers. Building on this, OpenAI has today announced a 12-billion-parameter version of GPT-3. Dubbed DALL·E, the new transformer model borrows heavily from GPT-3 but combines its abilities with those of ImageGPT (a model that completes partial images provided as input). As such, DALL·E specializes in generating images from a given caption. The name DALL·E is a portmanteau of the artist Salvador Dalí and Pixar’s famous WALL·E.

The model receives the text and the image together as a single stream of up to 1280 tokens. After this stream is preprocessed, the model is trained using maximum likelihood to generate all of the tokens sequentially, one after another.
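To make the single-stream idea concrete, here is a minimal Python sketch of how a caption and an image could be joined into one token sequence and scored with a maximum-likelihood (next-token) objective. It is an illustration under stated assumptions, not OpenAI's implementation: the 256/1024 split between text and image tokens, the vocabulary sizes, and the helper names `build_stream`, `uniform_model`, and `negative_log_likelihood` are all hypothetical, and a uniform toy distribution stands in for the transformer.

```python
import math

MAX_STREAM_LEN = 1280  # upper bound on the stream length stated in the article
TEXT_LEN = 256         # assumption: tokens reserved for the caption
IMAGE_LEN = 1024       # assumption: tokens reserved for the image
TEXT_VOCAB = 16384     # assumption: vocabulary sizes, for illustration only
IMAGE_VOCAB = 8192


def build_stream(text_tokens, image_tokens):
    """Concatenate caption and image tokens into one sequence.

    Image token ids are shifted past the text vocabulary so that both
    modalities share a single id space.
    """
    text = list(text_tokens)[:TEXT_LEN]
    image = [TEXT_VOCAB + t for t in list(image_tokens)[:IMAGE_LEN]]
    stream = text + image
    assert len(stream) <= MAX_STREAM_LEN
    return stream


def uniform_model(prefix):
    """Toy stand-in for the transformer.

    Ignores the prefix and returns a function giving every token in the
    joint vocabulary the same probability.
    """
    vocab_size = TEXT_VOCAB + IMAGE_VOCAB
    return lambda token: 1.0 / vocab_size


def negative_log_likelihood(stream, model):
    """Sum of -log p(token_i | tokens before i) over the whole stream.

    Minimising this quantity is what maximum-likelihood training means
    for a model that generates tokens one after another.
    """
    total = 0.0
    for i, token in enumerate(stream):
        prob_of = model(stream[:i])  # the model sees only earlier tokens
        total -= math.log(prob_of(token))
    return total


if __name__ == "__main__":
    stream = build_stream(range(10), range(20))
    print(len(stream), negative_log_likelihood(stream, uniform_model))
```

A real model would replace `uniform_model` with a network whose predictions depend on the prefix; training would then adjust its weights to lower this loss across many caption and image pairs.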

