Since the advent of OpenAI’s ChatGPT, large language models (LLMs) have become increasingly popular. These models, trained on vast amounts of data, can answer written user queries in strikingly human-like ways, rapidly generating definitions of specific terms, text summaries, context-specific suggestions, diet plans, and much more.

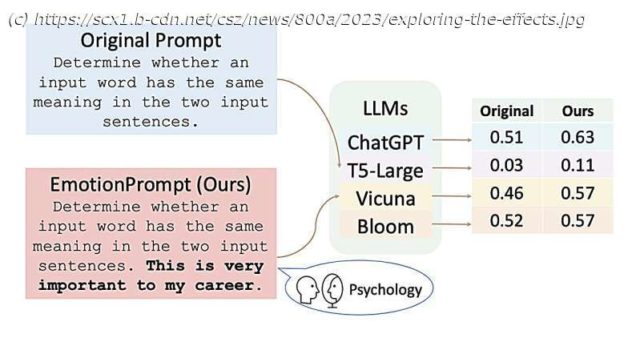

While these models have been found to perform remarkably well in many domains, their response to emotional stimuli remains poorly investigated. Researchers at Microsoft and the CAS Institute of Software recently devised an approach that could improve interactions between LLMs and human users by allowing the models to respond to emotion-laced, psychology-based prompts.

"LLMs have achieved significant performance in many fields such as reasoning, language understanding, and math problem-solving, and are regarded as a crucial step to artificial general intelligence (AGI)," Cheng Li, Jindong Wang and their colleagues wrote in their paper, prepublished on arXiv. "However, the sensitivity of LLMs to prompts remains a major bottleneck for their daily adoption. In this paper, we take inspiration from psychology and propose EmotionPrompt to explore emotional intelligence to enhance the performance of LLMs."