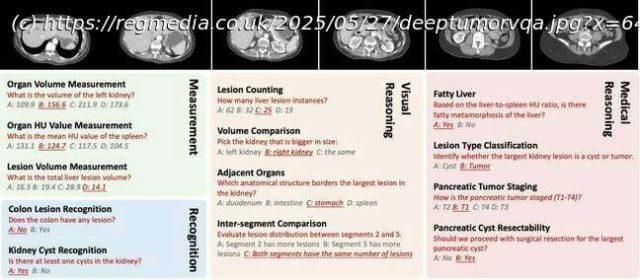

Researchers build a benchmark for testing vision language models and find them weak at medical reasoning

AI is not ready to make clinical diagnoses based on radiological scans, according to a new study.

Researchers often suggest radiology is a field AI has the potential to transform, because visual or multimodal models can recognize images quite well. The assumption is that AI models should be able to read X-rays and computed tomography (CT) scans as accurately as medical professionals, given enough training.

To test that hypothesis, researchers affiliated with Johns Hopkins University, University of Bologna, Istanbul Medipol University, and the Italian Institute of Technology decided they first had to build a better benchmark test to evaluate vision language models.

There are several reasons for this, explain authors Yixiong Chen, Wenjie Xiao, Pedro R. A. S. Bassi, Xinze Zhou, Sezgin Er, Ibrahim Ethem Hamamci, Zongwei Zhou, and Alan Yuille in a preprint paper [PDF] titled “Are Vision Language Models Ready for Clinical Diagnosis? A 3D Medical Benchmark for Tumor-centric Visual Question Answering.”

The first reason is that most existing clinical data sets are relatively small and lack diverse records, which the scientists attribute to the expense and time required for experts to annotate the data.

Second, these data sets often rely on 2D data, which means 3D CT scans sometimes aren’t present for AI to learn from.

Third, automated metrics for evaluating machine learning models, such as BLEU and ROUGE [PDF], don’t do all that well with short, factual medical answers.
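
To see why word-overlap metrics can mislead on short answers, here is a minimal sketch in Python. It uses a simplified, ROUGE-1-style unigram F1 written from scratch, not the official BLEU or ROUGE implementations and not the paper's evaluation code. The function name `unigram_f1` and the example sentences are hypothetical and chosen only for illustration.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1-style F1 based on clipped unigram overlap.

    Illustrative only: real ROUGE adds tokenization rules, stemming options,
    and longer n-gram and subsequence variants.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical reference answer and two candidate answers.
reference = "the lesion is in the left kidney"
wrong = "the lesion is in the right kidney"   # factually wrong, one word off
paraphrase = "left renal mass"                # plausible, different wording

wrong_score = unigram_f1(wrong, reference)
paraphrase_score = unigram_f1(paraphrase, reference)

print(f"wrong answer:      {wrong_score:.3f}")
print(f"paraphrased answer: {paraphrase_score:.3f}")
```

In this toy case the answer that names the wrong side shares six of seven words with the reference and scores about 0.86, while the differently worded answer scores 0.2. With answers this short, a single clinically decisive word carries little weight in an overlap score.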
