‘It looks sexy but it’s wrong’ – like the improbably well-endowed rat

Biomedical visualization specialists haven’t come to terms with how or whether to use generative AI tools when creating images for health and science applications. But there’s an urgent need to develop guidelines and best practices because incorrect illustrations of anatomy and related subject matter could cause harm in clinical settings or as online misinformation.

Researchers from the University of Bergen in Norway, the University of Toronto in Canada, and Harvard University in the US make that point in a paper titled “‘It looks sexy but it’s wrong.’ Tensions in creativity and accuracy using GenAI for biomedical visualization,” scheduled to be presented at IEEE’s Vis 2025 conference in November.

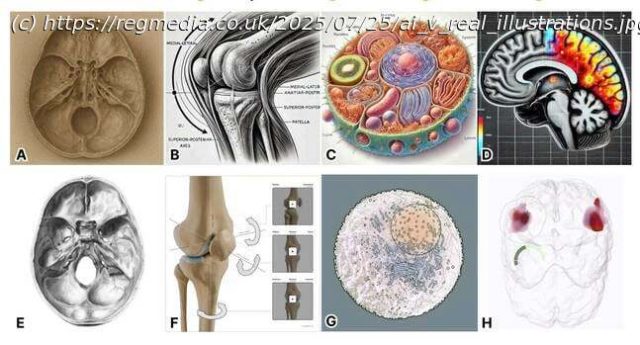

In their paper, authors Roxanne Ziman, Shehryar Saharan, Gaël McGill, and Laura Garrison present various illustrations created by OpenAI’s GPT-4o or DALL-E 3 alongside versions created by visualization experts.

Some of the examples cited diverge from reality in subtle ways. Others, like “the infamously well-endowed rat” in a now-retracted article published in Frontiers in Cell and Developmental Biology, would be difficult to mistake for anything but fantasy.

Either way, imagery created by generative AI may look nice but isn’t necessarily accurate, the authors say.

“In light of GPT-4o Image Generation’s public release at the time of this writing, visuals produced by GenAI often look polished and professional enough to be mistaken for reliable sources of information,” the authors state in their paper.

“This illusion of accuracy can lead people to make important decisions based on fundamentally flawed representations, from a patient without such knowledge or training inundated with seemingly accurate AI-generated ‘slop’, to an experienced clinician who makes consequential decisions about human life based on visuals or code generated by a model that cannot guarantee 100 percent accuracy.”

Co-author Ziman, a PhD fellow in visualization research at the University of Bergen, told The Register in an email, “While I’ve not yet come across real-world examples where AI-generated images have directly resulted in harmful health-related outcomes, one interview participant shared with us this case involving an AI-based risk-scoring system to detect fraud and wrongfully accused (primarily foreign parents) of childcare benefits fraud in the Netherlands.

“With AI-generated images, the more pervasive issue is the use of inaccurate imagery in medical and health-related publications, and scientific research publications broadly.
