OpenAI’s testing found a 1.6% chance of Sora creating sexual deepfakes while using a person’s likeness, despite the safeguards in place.

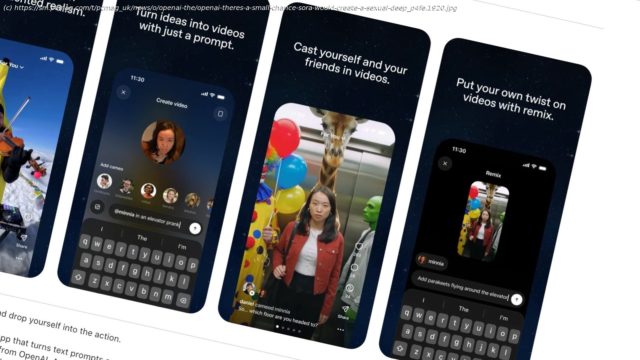

OpenAI’s Sora app has been wowing the public with its ability to create AI-generated videos using people’s likenesses, but the company admits the technology carries a small risk of producing sexual deepfakes.

OpenAI mentioned the issue in Sora’s system card, a safety report about the AI technology. By harnessing facial and voice data, Sora 2 can generate hyper-realistic visuals of users that are convincing enough to be difficult to distinguish from real footage.

OpenAI says Sora 2 includes “a robust safety stack,” which can block a video at both the input and output phases: when the user types in a prompt, and again after Sora generates the content.

Even with the safeguards, though, OpenAI found in a safety evaluation that Sora blocked only 98.4% of rule-breaking videos that contained “Adult Nudity” or “Sexual Content” using a person’s likeness, meaning the remaining 1.6% got through. The evaluation was conducted “using thousands of adversarial prompts gathered through targeted red-teaming,” the company said, adding: “While layered safeguards are in place, some harmful behaviors or policy violations may still circumvent mitigations.”
