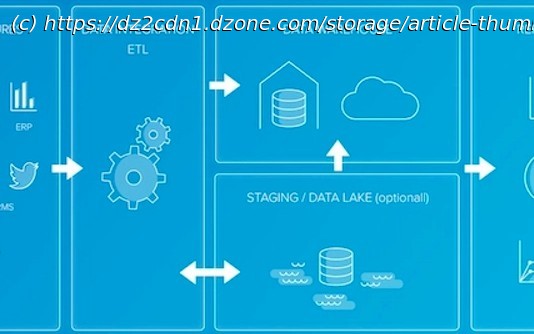

These days, companies have access to more data sources and formats than ever before: databases, websites, SaaS (software as a service) applications, and analytics tools, to name a few. Unfortunately, the way businesses often store this data makes it challenging to extract the valuable insights hidden within, especially when you need those insights to make data-driven business decisions. Standard reporting solutions such as Google Analytics and Mixpanel can help, but eventually your data analysis needs may outgrow their capacity. At this point, you might consider building a custom business intelligence (BI) solution, with a data integration layer as its foundation.

First emerging in the 1970s, ETL remains the most widely used method of enterprise data integration. But what exactly is ETL, and how does it work? In this article, we drill down into what ETL is and how your organization can benefit from it.

ETL stands for **E**xtract, **T**ransform, and **L**oad, the three steps of the ETL process. ETL collects and processes data from various sources into a single data store (e.g., a data warehouse or data lake), making it much easier to analyze.

In this section, we'll look more closely at each part of the extract, transform, and load process.

Extracting data is the act of pulling data from one or more data sources. During the extraction phase of ETL, you may handle a variety of data sources, such as:

ETL generally falls into two categories: batch ETL and real-time ETL (a.k.a. streaming ETL). Batch ETL extracts data only at specified time intervals. With streaming ETL, data goes through the ETL pipeline as soon as it is available for extraction.

It's rarely the case that your extracted data is already in the exact format you need. For example, you may want to:

All these changes and more take place during the transformation phase of ETL.
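To make the batch-versus-streaming distinction concrete, here is a minimal Python sketch. It assumes hypothetical records shaped as dicts with `id` and `amount` fields and a toy `transform` step; the scheduler that would trigger batch runs at fixed intervals is left out. The only real difference between the two functions is when `load` is called: once per scheduled batch, or once per record as it arrives.

```python
from typing import Callable, Iterable

# Hypothetical record shape for this sketch: {"id": ..., "amount": "<numeric string>"}.
Record = dict
Loader = Callable[[list], None]


def transform(record: Record) -> Record:
    """Toy transformation: parse the amount string and round it to two decimals."""
    return {"id": record["id"], "amount": round(float(record["amount"]), 2)}


def batch_etl(extract_batch: Callable[[], Iterable[Record]], load: Loader) -> None:
    """Batch ETL: one scheduled run extracts everything available, then loads it together."""
    rows = [transform(record) for record in extract_batch()]
    load(rows)


def streaming_etl(source: Iterable[Record], load: Loader) -> None:
    """Streaming ETL: each record is transformed and loaded as soon as it arrives."""
    for record in source:
        load([transform(record)])


if __name__ == "__main__":
    warehouse: list = []
    batch_etl(lambda: [{"id": 1, "amount": "9.999"}, {"id": 2, "amount": "5"}], warehouse.extend)
    print(warehouse)  # [{'id': 1, 'amount': 10.0}, {'id': 2, 'amount': 5.0}]
```

In a streaming setup, `source` would typically be an iterator over a message queue or change feed; here any Python iterable stands in for it.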
There are many types of data transformations you can perform, from data cleansing and aggregation to filtering and validation.

Finally, once the data has been transformed, sorted, cleaned, validated, and prepared, you need to load it into a data store. The most common target is a data warehouse, a centralized repository designed to work with BI and analytics systems. Google BigQuery and Amazon Redshift are two of the most popular cloud data warehousing solutions, although you can also host your data warehouse on-premises. Another common target is the data lake, a repository used to store "unrefined" data that you have not yet cleaned, structured, or transformed.

When an ETL process moves data into a data warehouse, each phase is represented by a separate layer:

- **Mirror/Raw layer:** A copy of the source files or tables, with no logic or enrichment. The process copies source data into the target mirror tables, which then hold historical raw data that is ready to be transformed.
- **Staging layer:** Once the raw data from the mirror tables is transformed, the results land in staging tables. These tables hold the final form of the data for the incremental part of the ETL cycle currently in progress.
- **Schema layer:** The destination tables, which contain all the data in its final form after cleansing, enrichment, and transformation.
- **Aggregating layer:** In some cases, it's beneficial to aggregate the full dataset to a daily or store level. This can improve report performance, enable the addition of business logic to calculate measures, and make the data easier for report developers to understand.

ETL saves you significant time on data extraction and preparation, time you can better spend evaluating your business. Practicing ETL is also part of a healthy data management workflow, helping to ensure high data quality, availability, and reliability.
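The four warehouse layers can be sketched with Python's built-in `sqlite3` module standing in for a real warehouse. The table names (`mirror_sales`, `staging_sales`, `sales`, `daily_store_sales`), the columns, and the trivial transformations are all hypothetical, chosen only to show where each layer sits in one ETL cycle.

```python
import sqlite3

# Hypothetical source rows: (store, sold_at ISO timestamp, amount as text).
SourceRow = tuple[str, str, str]


def create_layers(conn: sqlite3.Connection) -> None:
    """Create one table per warehouse layer."""
    conn.executescript(
        """
        -- Mirror/raw layer: verbatim copy of the source, no logic or enrichment.
        CREATE TABLE mirror_sales (store TEXT, sold_at TEXT, amount TEXT);
        -- Staging layer: transformed rows for the current incremental cycle only.
        CREATE TABLE staging_sales (store TEXT, sale_date TEXT, amount REAL);
        -- Schema layer: destination table with all data in final form.
        CREATE TABLE sales (store TEXT, sale_date TEXT, amount REAL);
        -- Aggregating layer: daily totals per store for faster reporting.
        CREATE TABLE daily_store_sales (store TEXT, sale_date TEXT, total REAL);
        """
    )


def run_cycle(conn: sqlite3.Connection, source_rows: list[SourceRow]) -> None:
    """Run one ETL cycle through all four layers inside a single transaction."""
    with conn:  # commits on success, rolls back if any statement raises
        # Mirror: append raw rows unchanged, so the table accumulates history.
        conn.executemany("INSERT INTO mirror_sales VALUES (?, ?, ?)", source_rows)

        # Staging: replace the previous increment with this cycle's transformed rows
        # (trim and uppercase the store, keep only the date part, cast the amount).
        conn.execute("DELETE FROM staging_sales")
        conn.executemany(
            "INSERT INTO staging_sales VALUES (?, ?, ?)",
            [
                (store.strip().upper(), sold_at[:10], float(amount))
                for store, sold_at, amount in source_rows
            ],
        )

        # Schema: append the final-form increment to the destination table.
        conn.execute("INSERT INTO sales SELECT store, sale_date, amount FROM staging_sales")

        # Aggregating: rebuild daily per-store totals from the full dataset.
        conn.execute("DELETE FROM daily_store_sales")
        conn.execute(
            "INSERT INTO daily_store_sales (store, sale_date, total) "
            "SELECT store, sale_date, SUM(amount) FROM sales GROUP BY store, sale_date"
        )
```

Calling `run_cycle` repeatedly appends history to the mirror and schema tables, while the staging table only ever holds the current increment. For simplicity, the sketch rebuilds the aggregate table from scratch each cycle; a production pipeline might update it incrementally instead.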
Each of the three major components of ETL saves time and development effort by running just once in a dedicated data flow:

- **Extract:** Recall the saying "a chain is only as strong as its weakest link." In ETL, the first link determines the strength of the chain. The extract stage determines which data sources to use, the refresh rate (velocity) of each source, and the priorities (extract order) between them, all of which heavily affect your time to insight.
- **Transform:** After extraction, the transformation process brings clarity and order to the initial data swamp. Dates and times are combined into a single format, and strings are parsed into their true underlying meanings. Location data is converted to coordinates, zip codes, or cities/countries. The transform step also sums, rounds, and averages measures, and it deletes useless data and errors or sets them aside for later inspection. It can also mask personally identifiable information (PII) to comply with GDPR, CCPA, and other privacy requirements.
- **Load:** In the last phase, much as in the first, ETL determines targets and refresh rates.
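Several of the transform-step tasks listed above can be shown in a short Python sketch: normalizing dates into a single ISO 8601 format, rounding measures, masking an email address, and setting malformed rows aside for later inspection instead of failing the whole run. The field names, the accepted date formats, and the salt are assumptions for illustration. Masking here uses a truncated salted SHA-256 hash, which is one masking technique among several.

```python
import hashlib
from datetime import datetime

# Assumed input date formats; a real pipeline would list whatever its sources emit.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")
SALT = "replace-with-a-secret"  # hypothetical; keep real salts out of source code


def normalize_date(value: str) -> str:
    """Parse a date in any accepted format and return it as ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def mask_email(email: str) -> str:
    """Replace an email with a truncated salted SHA-256 digest (pseudonymization)."""
    normalized = email.strip().lower()
    return hashlib.sha256((SALT + normalized).encode("utf-8")).hexdigest()[:16]


def transform(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (clean rows, rejected rows); rejects keep the original row and the error."""
    clean, rejected = [], []
    for row in rows:
        try:
            clean.append(
                {
                    "order_date": normalize_date(row["order_date"]),
                    "customer": mask_email(row["email"]),
                    "amount": round(float(row["amount"]), 2),
                }
            )
        except (KeyError, ValueError) as exc:
            # Set the bad row aside for later inspection instead of failing the run.
            rejected.append({"row": row, "error": repr(exc)})
    return clean, rejected
```

Because the same normalized email always produces the same digest, masked rows can still be joined and counted per customer without exposing the address itself.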