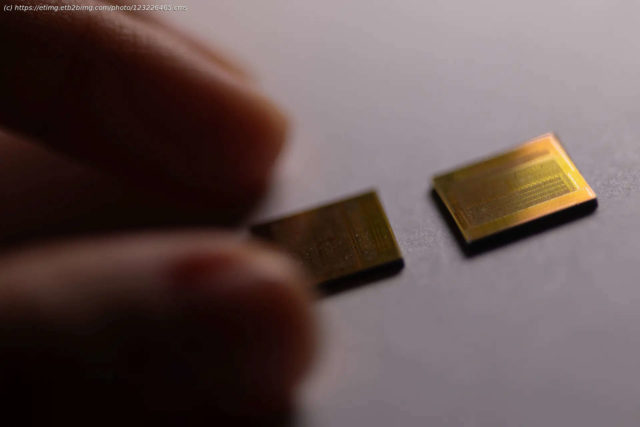

A highest-capacity, high-bandwidth 16-High HBM3E memory chip from SK Hynix is seen in this studio photograph in San Francisco, California, U.S. August 6, 2025. REUTERS/Carlos Barria

By Heekyong Yang and Max A. Cherney

SEOUL - South Korea’s SK Hynix forecasts that the market for a specialized form of memory chip designed for artificial intelligence will grow 30% a year until 2030, a senior executive said in an interview with Reuters.

The upbeat projection for global growth in high-bandwidth memory (HBM) for use in AI brushes off concern over rising price pressures in a sector that for decades has been treated like commodities such as oil or coal.

"AI demand from the end user is pretty much, very firm and strong," said Choi Joon-yong, the head of HBM business planning at SK Hynix.

The billions of dollars in AI capital spending that cloud computing companies such as Amazon, Microsoft and Alphabet’s Google are projecting will likely be revised upwards in the future, which would be "positive" for the HBM market, Choi said.

The relationship between AI build-outs and HBM purchases is "very straightforward" and there is a correlation between the two, Choi said. SK Hynix’s projections are conservative and include constraints such as available energy, he said.

But the memory business is undergoing a significant strategic change during this period as well.