The first thing to look at to start optimizing a query is the Query Planner. In this post, we explain how a query gets executed and how to understand the …

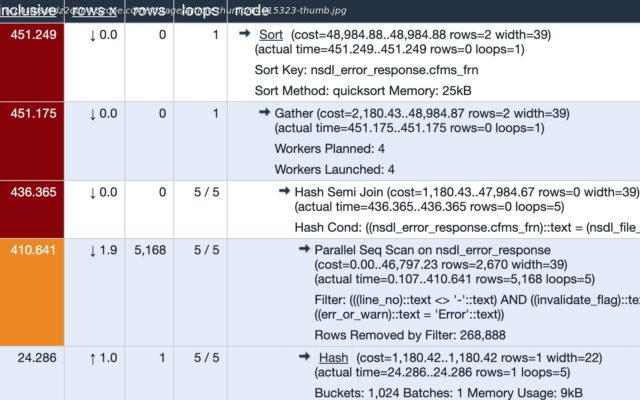

Understanding the PostgreSQL query plan is a critical skill for developers and database administrators alike. It is probably the first thing we look at when we start optimizing a query, and also the first thing we check to verify that an optimized query really is optimized the way we expect.

Before we attempt to read a query plan, it is important to ask some very basic questions about how a query gets executed. Every query goes through several stages, and it is important to understand what each stage means to the database.

The first phase is establishing a connection to the database server and sending it the query. The second phase translates the query into an intermediate format known as the parse tree. Discussing the internals of the parse tree is beyond the scope of this article, but you can think of it as a compiled form of an SQL query. The third phase is what we call the rewrite system, or rule system. It takes the parse tree generated in the second phase and rewrites it in a form that the planner/optimizer can work on.

The fourth phase, the planner, is the most important phase and the heart of the database. Without the planner, the executor would be flying blind about how to execute the query: which indexes to use, whether to scan a smaller table first to eliminate unnecessary rows, and so on. This phase is what we will be discussing in this article.

The fifth and final phase is the executor, which does the actual execution and returns the result. Almost all database systems follow a process that is more or less similar to this one.

Let’s set up a dummy table with fake data to run our experiments on, and then fill it with data. I used a Python script to generate random rows; it uses the Faker library to generate fake data. The script writes a CSV file at the root level, which can be imported into PostgreSQL like any regular CSV. Since id is serial, PostgreSQL fills it in automatically. The table now contains 1119284 records.
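The data-generation step described above could look roughly like the following. This is a minimal sketch, not the original script: the column names `name` and `company`, the file name `fake_data.csv`, and the helper names are assumptions made for illustration. It uses Faker when the library is installed and falls back to random strings from the standard library otherwise, so it runs either way.

```python
import csv
import random
import string

try:
    from faker import Faker  # third-party: pip install Faker
except ImportError:  # fall back to the stdlib so the sketch still runs
    Faker = None


def random_word(rng, length=8):
    """Stdlib stand-in for a Faker value: a random lowercase string."""
    return "".join(rng.choice(string.ascii_lowercase) for _ in range(length))


def generate_csv(path, rows, seed=0):
    """Write `rows` fake records to `path`.

    The `id` column is left out on purpose: it is serial, so PostgreSQL
    assigns it during the import.
    """
    rng = random.Random(seed)
    fake = Faker() if Faker is not None else None
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["name", "company"])  # hypothetical columns
        for _ in range(rows):
            if fake is not None:
                writer.writerow([fake.name(), fake.company()])
            else:
                writer.writerow([random_word(rng), random_word(rng)])
    return path


if __name__ == "__main__":
    generate_csv("fake_data.csv", 1000)
```

Assuming a table named `fake_data` with matching columns, a file like this could then be loaded from psql with something along the lines of `\copy fake_data (name, company) FROM 'fake_data.csv' WITH (FORMAT csv, HEADER true)`.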
Most of the examples below are based on this table. It is intentionally kept simple to focus on the process rather than on table or data complexity. The examples use the Arctype editor. The featured image of the post comes from the Depesz online Explain tool.

PostgreSQL and many other database systems let users look under the hood at what actually happens in the planning stage. We can do so by running the `EXPLAIN` command.

Let’s proceed to a more interesting metric called `BUFFERS`. It shows how much of the data came from the PostgreSQL cache and how much had to be fetched from disk. `Buffers: shared hit=5` means that five pages were fetched from the PostgreSQL cache itself.

Let’s tweak the query to offset from different rows. `Buffers: shared hit=7 read=5` shows that seven pages came from the cache and five pages had to be read from disk (more precisely, from outside PostgreSQL's shared buffers, which may still be served by the operating system's file cache).
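To make the hit/read distinction concrete, here is a small Python sketch that parses a `Buffers:` line from plan output and computes the share of pages served from the PostgreSQL cache. The query string, the table name `fake_data`, and the helper names are assumptions for illustration; only the `EXPLAIN (ANALYZE, BUFFERS)` syntax and the two sample `Buffers:` lines come from PostgreSQL and the text above.

```python
import re

# Hypothetical query: the table name and the LIMIT/OFFSET values are
# assumptions. BUFFERS is an option of EXPLAIN, used here with ANALYZE.
EXPLAIN_QUERY = (
    "EXPLAIN (ANALYZE, BUFFERS) "
    "SELECT * FROM fake_data LIMIT 10 OFFSET 500;"
)

# Matches e.g. "Buffers: shared hit=7 read=5"; either counter may be absent.
BUFFERS_RE = re.compile(r"Buffers: shared(?: hit=(\d+))?(?: read=(\d+))?")


def parse_shared_buffers(plan_line):
    """Return shared-buffer hit/read page counts, or None if not present."""
    match = BUFFERS_RE.search(plan_line)
    if match is None:
        return None
    return {
        "hit": int(match.group(1) or 0),   # pages found in shared buffers
        "read": int(match.group(2) or 0),  # pages read from outside them
    }


def cache_hit_ratio(counts):
    """Fraction of pages served from the cache, or None if no pages."""
    total = counts["hit"] + counts["read"]
    return counts["hit"] / total if total else None


if __name__ == "__main__":
    for line in ("Buffers: shared hit=5", "Buffers: shared hit=7 read=5"):
        counts = parse_shared_buffers(line)
        print(line, "->", counts, cache_hit_ratio(counts))
```

Running the same query repeatedly usually shifts pages from `read` to `hit`, because pages fetched on the first run tend to stay in the cache for later runs.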