This article is the missing step-by-step tutorial needed to glue all the pieces of Consul, Nomad, and Vault together.


This article explains how to build a secure platform using HashiCorp’s stack. Many of the steps are well documented, but we found few hints for ironing out the wrinkles that appear along the way, so here we show how to glue the whole procedure together. We describe the most critical parts in detail, explaining where we ran into issues and how we solved them, and point to the official tutorials where they are sufficient, to keep this article from getting too long and difficult to follow.

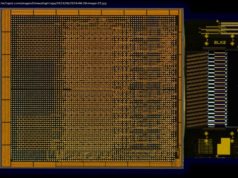

As shown in the picture above, the physical architecture we want to achieve is a Consul and Nomad cluster of five nodes: three of them are Control Plane nodes configured for High Availability (HA), while the remaining two are Data Plane nodes.

Given that we are in an edge environment, the final location of cluster servers is key:

Control plane nodes (servers) are distributed across the fair’s pavilions or installed in a dedicated cabinet in the main building

Data plane nodes (clients) are physically located on every pavilion

By default, the servers’ role is to maintain the state of the clusters, while the clients serve the users who are physically located near them.

In the era of Automation and Virtualization, the way to proceed is to create a «test and destroy» virtualized environment where we can try our configurations before going into production and deploying everything on the physical infrastructure.

The DevOps toolbox, composed of tools such as Ansible, Terraform, and Git, has been a strict requirement for evolving and refining the configuration and the procedure needed to set up such a complicated environment. Ansible Vault is our choice to ensure that sensitive content related to specific environments, such as passwords, keys, configuration variables, bootstrapping tokens, or TLS certificates, is protected and encrypted rather than visible in plain text in the Git repository where we store the code for building the infrastructure.

We start by creating five virtual machines. For our tests, we used the following specs:

OS: Ubuntu 22.04 LTS

Disk: 20GB

Memory: 2GB

vCPU: 2

You can choose your preferred Public Cloud provider and deploy the virtual machines there with Terraform. Alternatively, to speed up the deployment process, you can create them on your laptop using VirtualBox and Vagrant. Either way, you will end up with an Ansible inventory that lists the five nodes.
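As a rough illustration, such an inventory could be written as follows. This is a sketch, not the authors’ actual file: the host names, the `servers` and `clients` group names, the file path, and the 192.0.2.x addresses (a documentation-only range) are all placeholders.

```shell
# Hypothetical inventory for the five nodes; every name and address is a placeholder.
mkdir -p inventory
cat > inventory/hosts.ini <<'EOF'
# Control plane nodes (Consul and Nomad servers)
[servers]
server-1 ansible_host=192.0.2.11
server-2 ansible_host=192.0.2.12
server-3 ansible_host=192.0.2.13

# Data plane nodes (Consul and Nomad clients)
[clients]
client-1 ansible_host=192.0.2.21
client-2 ansible_host=192.0.2.22
EOF

# Optional sanity check, if Ansible is installed:
# ansible-inventory -i inventory/hosts.ini --graph
```

Keeping servers and clients in separate groups makes it easy to assign different role variables to each group later on.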

In this bootstrapping phase, the open source Ansible community provides a library of extensively tested and secure public roles. Thanks to the Ansible Galaxy utility, we can pull the code of two roles that deploy Consul and Nomad, respectively.
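One possible way to pull the two roles is a requirements file consumed by `ansible-galaxy`. The article does not name the roles, so the GitHub repositories below (the ansible-community Consul and Nomad roles) are an assumption, as are the local role names and the `roles/` target directory.

```shell
# Assumption: the two roles are the ansible-community ones hosted on GitHub.
cat > requirements.yml <<'EOF'
roles:
  - name: ansible-consul
    src: https://github.com/ansible-community/ansible-consul
    scm: git
  - name: ansible-nomad
    src: https://github.com/ansible-community/ansible-nomad
    scm: git
EOF

# Then, on a machine with Ansible and network access:
# ansible-galaxy install -r requirements.yml -p roles/
```

Keeping the requirements file in Git alongside the playbook lets every environment install the same roles.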

We combined the above roles into a proprietary playbook that, first of all, configures and sets up the server networking with a dummy network interface, which we will call dummy0. That interface is mapped to the reserved (link-local) IP address 169.254.1.1 and is used to resolve *.consul domain names in the cluster. We rely on Ansible to configure it on the target nodes, so at the end every server will have the file /etc/systemd/network/dummy0.network.
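The file contents are not reproduced here, so the following is only a plausible sketch of a systemd-networkd configuration for such an interface. It writes into a local staging directory (a hypothetical path) instead of /etc. The extra dummy0.netdev file and the `Domains=~consul` routing domain are assumptions about how the interface is created and how *.consul lookups are directed to it.

```shell
# Staging directory standing in for / on a target node (hypothetical path).
STAGING=staging/etc/systemd/network
mkdir -p "$STAGING"

# Assumption: systemd-networkd creates the interface from a .netdev file.
cat > "$STAGING/dummy0.netdev" <<'EOF'
[NetDev]
Name=dummy0
Kind=dummy
EOF

# Bind 169.254.1.1 to dummy0 and route only *.consul lookups to it.
cat > "$STAGING/dummy0.network" <<'EOF'
[Match]
Name=dummy0

[Network]
Address=169.254.1.1/32
DNS=169.254.1.1
Domains=~consul
EOF
```

On a real node the files would live under /etc/systemd/network. The setup only resolves names if Consul’s DNS interface (or a forwarder in front of it) actually answers on 169.254.1.1, which is an assumption about the rest of the configuration.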

In addition, the playbook applies a few basic customizations to the servers, such as updating the OS, setting up the nodes’ users and importing their SSH authorized_keys, and installing the container runtime and some debugging CLI tools.

Finally, take care to configure the Consul and Nomad roles by setting the proper Ansible variables. Most of their names are self-explanatory, but you must at least specify consul_version and nomad_version (in our case, 1.12.2 and 1.2.8, respectively). The two client nodes should set the consul_node_role and nomad_node_role variables to client, while the three other nodes should set them to server. Another useful variable is nomad_cni_enable: true; without it, you will have to install the CNI plugins manually on every Nomad node. More information about how to configure the roles for your specific case is available on the related GitHub pages.
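A minimal sketch of how these variables could be laid out as Ansible group variables is shown below. It assumes inventory groups named `servers` and `clients` (hypothetical names) and reads the role assignment as "control plane nodes are servers, data plane nodes are clients". Only the variable names and versions come from the text above.

```shell
mkdir -p group_vars

# Settings shared by every node.
cat > group_vars/all.yml <<'EOF'
consul_version: "1.12.2"
nomad_version: "1.2.8"
nomad_cni_enable: true
EOF

# Control plane nodes (assumed inventory group: servers).
cat > group_vars/servers.yml <<'EOF'
consul_node_role: server
nomad_node_role: server
EOF

# Data plane nodes (assumed inventory group: clients).
cat > group_vars/clients.yml <<'EOF'
consul_node_role: client
nomad_node_role: client
EOF
```

Quoting the version numbers keeps YAML from interpreting them as anything other than strings.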

In this way, we are also able to support, with minimal effort, the rapid provisioning and deployment of different environment inventories, ranging from production bare-metal servers or cloudlets at the edge to development and testing virtual machines in the cloud.

At this point, you have a Consul + Nomad cluster ready, and you should be able to access the respective UIs and launch some test workloads. If everything is working fine, it is time to provision the Vault cluster, which, for simplicity and exclusively for this testing phase, will be installed in HA on the very same server nodes where the Nomad and Consul control planes run.

If you are new to Vault, start with the official introductory tutorials and play with it a little. Then you can move on to the Ansible role for HashiCorp Vault to install this component. Before jumping into the playbook magic, however, a few manual steps are needed to bootstrap the PKI for our Vault cluster.

You can rely on an existing PKI configuration if your company provides one. However, we will start from scratch, creating our own Root Certification Authority (CA) and certificates.

First, create the root key (this is the CA private key and can be used to sign certificate requests), then use the root key to create the root certificate that needs to be distributed to all the clients that have to trust us. You can tune the value for the -days flag depending on your needs.

You will be prompted for the certificate fields; customize them with something like C=IT, O=CompanyName, CN=company.com, emailAddress=example@company.com. Optionally, inspect the resulting root CA certificate afterwards.
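The three steps just described (root key, root certificate, optional check) might look like the following minimal sketch with the `openssl` CLI. The file names, the `pki/` directory, the 4096-bit key size, and the 3650-day validity are assumptions. To keep the sketch non-interactive, the fields are passed with `-subj` rather than typed at the prompts.

```shell
mkdir -p pki

# Root key: the CA private key. Adding -aes256 would protect it with a
# passphrase (omitted here so the sketch runs without prompting).
openssl genrsa -out pki/rootCA.key 4096

# Self-signed root certificate; -subj supplies the fields instead of the prompts.
openssl req -x509 -new -key pki/rootCA.key -sha256 -days 3650 \
  -subj "/C=IT/O=CompanyName/CN=company.com/emailAddress=example@company.com" \
  -out pki/rootCA.crt

# Optional check: print the certificate in human-readable form.
openssl x509 -in pki/rootCA.crt -noout -text
```

Only pki/rootCA.crt is meant to be distributed to clients; the key should stay private, ideally encrypted, for example with Ansible Vault as mentioned earlier.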

At this point, we need to create a certificate for every server, appliance, or application that needs to expose a TLS-encrypted service via a certificate that is trusted by our CA.
